Half-Truth Tables and Abnormal Forms

The Hitchhiker's Guide To Logic

| Author: | Wolfgang Scherer |

|---|

Contents

Old News

- Truth table

- CNF/DNF, CCNF, CDNF

News

- Proper mapping of truth values for variables

- CNF of DNF

- SNF

Summary

If this article incites negative feelings in you, you may be better off waiting for the G-rated serious version of the satoku matrix.

Preface

Wikipedia's Law of identity cites Aristotle:

"First then this at least is obviously true, that the word 'be' or 'not be' has a definite meaning, so that not everything will be 'so and not so' ..."

and defines it as:

“each thing is the same with itself and different from another”: “A is A and not ~A”,

while Nine-of-Nine teaches:

- if A is, then it is that A is A

- if A is not, then it is that A is not A.

It can be funny to watch other people (including oneself) squirm when they wrangle with logic.

That the statement “each thing is the same with another and different from itself” seems non-sensical, just reveals one thing to be only half true, namely that “each thing is the same with itself and different from another” should be equivalent with “A is A and not ~A”. There is simply no equivalence here.

It rises from the common failure to distinguish a material implication from an equivalence. And it is much more common than commonly assumed. Since the common assumption - more often than not - does indeed fail to distinguish the two from each other.

So how about this?

“A is A and not ~A”implies “each thing is the same with itself and different from another”.“each thing is the same with another and different from itself”implies “A is ~A and not A”.

I personally find that beautiful and funny (in that order). But that's just a subjective judgement, which has nothing to do with logic.

The problem seems to be, that HOS (haters of self-referentiality) don't like the definition of logic to be self-referential. But that is also a subjective judgement, which has nothing to do with logic.

There is much ado about paradoxes stemming from self-referentiality:

This sentence is false.

And HOS pass laws over laws to avoid such nonsense (the class of all classes is a no-no!).

Here, the problem of future contingents rears its ugly head.

The liar's paradox can be found at the core of Gödel's incompleteness theorems.

As soon, as the interpretation of the sentence is done in discreet stages, a simple oscillator appears, that produces the infinite sequence of 0, 1, 0, 1, ... (which is just a regular sequence, and as sequence of partial sums for the sequence 1, -1, 1, -1, ..., it is quite useful to determine the cesaro sum).

However, almost nothing is said about seemingly harmless self-referential statements:

This sentence is true.

The perfect tautology, isn't it perfect! It is, isn't it!Well. Sure.But what if that sentence is actually false?Now what? --This one is related to Tarski's undefinability theorem.

And that is the problem with self-evident truths. There are none.

And there we are -- right in the middle of the ever-lasting epic battle of Good against Evil, Light against Shadow, Reason against Stupidity, True against False, Yin against Yang, Ping against Pong and Tom against Jerry.

And that is, why logic is a hairy beast (another possible interpretation of A is ~A: a metaphor).

General Semantics

Ignoring the results of General semantics leads to the kind of confusion caused by the interpretation of the verb "be".

Mathematics for Creationists

In the beginning there was chaos. And God saw, that it was good. But then He got bored and called the chaos Logic. The Lord spoke: "Let there be light". And He called the light Propositional Calculus. And He called the darkness Selection. And He let the light shine onto the darkness and called it Graph Theory. Then the Lord took a lump of clay and created Ma(thematicia)n in his image. He gave the Calculus and the Graph to Man. And the Lord forbade that Man ever make an image of ternary functions in Calculus as truth tables. Only binary functions shall be good functions from now on, because only they can be applied recursively upon themselves. The Graph shall be the only place to examine the darkness. And the Calculus shall not be merged into it.

Words

The following section is intentionally hard to understand. It illustrates a specific way of thinking and is meant to give the reader a hint, whether the rest will be easily digestable ‐ or not.

Indented blocks signify branches of thoughts (like branches in a graph/tree). Skip them to follow the main argument.It is not necssary to understand anything in this section, in order to understand why a truth table is only a half-truth table and why normal forms are not really normal. Just skip it, if you don't like it.

While trying to explain my research, I ran into the problem ‐ again and again ‐ that most words are already taken.

In order to label a new and unrelated concept the problem is eventually solved by simply replacing a word with another one. If the concept is an extension of an existing one, a qualifying adjective usually does the job. It is much harder, when a concept is paraphrased in absolute terms (e.g. "normal", "minimal") and when it becomes clear, that it is not so absolute after all.

Since I am an intuitive thinker (which may or may not be a contradiction in itself), I usually do not think in words. When trying to express a thought in words, I usually take the first one that comes to mind and slightly resembles the concept I wish to describe. Since I also have a sieve for a memory, I often use different words for the same concept over time. Especially when changing the perspective of the problem at hand. In object oriented programming this is called polymorphy and it is a good thing™.

A very peculiar effect of labeling a new concept with the words for an existing concept based on resemblance (A is A), comes from the fact that the opposite of a concept also bears that resemblance (and not ~A).

So it unfortunately happens (more often than I wish and desire), that the word for the opposite of a concept comes to mind first and I find myself talking about something using the words for the exact opposite of it. When I finally realize that everything I say leads to a contradiction, I mentally substitute the correct term into my previous statements and carry on.

Some of my documentation talks about conjunctive normal form using the words "disjunctive normal form", because I decided that the inner OR-operator is much more important than the outer AND operator. I corrected this, when I finally looked up what the rest of the world thinks about the matter, but it remains wrong in some very old texts.

When I communicate this epiphany by resolving such a situation with the words:

"Yes, the word is wrong. But it does not matter ‐ it is really the same thing, only just the opposite.",

I expect people to understand what I mean, not what I say. It is the funny look I get time and again, which makes me understand, that it is hard to follow my thoughts sometimes.

However, if this happens to somebody else, I usually do not even correct them, as long as I can follow the argument. It is only, when I can no longer reconcile the verbal statements with the logical conclusions, that I ask, whether someone means black when they say black, or if they are actually talking about white.

This also elicits strange looks, which in turn are more informative responses than any lengthy explanation I might get, because someone thinks that I do not really understand what they are saying.

I know that I am not the only one with this specific deficiency, because sometimes I do not get a strange look, but someone actually considers the possibility that he used the word inappropriately and just asserts the intended use of it. No question arises, why I would even ask such a question.

As it is the very nature of logic, that the negation of a contradiction is a tautology, I really do not have a problem with that situation. As a matter of fact, for me a tautology and a contradiction are really the same thing, since they do work in the same manner. They both have maximum resistance against changes to the final result.

In both cases, it takes a while to find out what the final result will be. E.g., an unsatisfying regular 3-XORSAT problem can be made satisfying by negating a single variable in a single XOR clause. It's just a matter of (polynomial) time to find out which one. So in order to solve such a problem, you can either insist on it being a contradiction forever and a day, or you can change it a little bit to make it work.

Having Some Fun

Logic does not kill fun ‐ people do.

Here is a little personality test. Which of the following tables do you find most agreeable?

Concept : Logic : People Tautology : always true : right good useful serious Contradiction : always false : wrong bad worthless funny Concept : Logic : People Tautology : always true right : good useful serious Contradiction : always false wrong : bad worthless funny Concept : Logic : People Tautology : always false : right good useful serious Contradiction : always true : wrong bad worthless funny It all depends.

None of the above.

As with all personality tests, there is no wrong answer. So, the correct answer is e.

The point of this is the fact that there is much ado about exchanging all and/or/negation signs in logical formulas. But nobody ever talks about the fact, that you can simply exchange true and false and nothing happens. (This is not so easy with numbers if you actually perform arithmetic operations, so I will leave everything as it is. No law-breaking here.)

Anyway, if c) gives you heartburn that may indicate that you will have a problem with my style.

Back To Words

The semantics of the standard explanation of logic often assert absolute truths. When I gradually discovered, that some of these notions are incomplete, I found myself looking for new words that properly express this incompleteness.

This is really hard to do without reusing the existing expressions. So sometimes, I temporarily rename existing concepts and use the word for my new concepts. This may appear as funny, however, it is really just a cry for help in finding the correct terms.

What do you call a truth table, which provides more information than the "fully expanded truth table"? It should not even be possible, should it?

The satoku matrix evolved from "Full Clause Assignments" which are now called "Clause Vectors" and represent a partial assignment in positional clause variable matrix notation. Since it looked like a matrix and was used for resolving conflicts, I first named it "Conflict Resolution Matrix". Since "Resolution" is already used for the DPLL algorithm, I was unhappy with that choice, since the majority of algorithmic functions employed in the context of the satoku matrix have nothing to do with the DPLL resolution concept. So, when I found the adjacency matrix and the mapping of a CNF problem to an independent set problem, I used "Adjacency Matrix" for a while. When I realized, that this might cause expectations of graph theoretical algorithms I finally decided that it must get a completely different name. The closest algorithmic resemblance I could come up with, was "Sudoku" which begat "Satoku Matrix". And that is what it shall be called now and forever!

Mr. Spock and Nine-of-Nine

I blame Startrek and Mr. Spock for falling prey to the notion of emotionless logic.

And there I thought watching TV is not so harmful as it is made out to be.

As I found out, mathematics seems to be far more emotional than I assumed. There appears to be a deep affection for Her Majesty the Truth:

TRUE ⇔ relevantFALSE ⇔ irrelevant

But according to Nine-of-Nine, the fully expanded relevance table is:

| Relevance | Truth |

|---|---|

| RELEVANT | FALSE |

| RELEVANT | TRUE |

| irrelevant | FALSE |

| irrelevant | TRUE |

There is no need to be terrified by the monstrosity of fully expanded relevance tables. It is perfectly sufficient to work with truth tables, since the relevance of a statement can be determined by a simple local search algorithm in worst case polynomial time O(n5) , with a lower bound of best case time O(1) , by determining the truth value first and then applying the optimized relevance table:

| Truth | Relevance |

|---|---|

| TRUE | RELEVANT |

| TRUE | irrelevant |

| FALSE | RELEVANT |

| FALSE | irrelevant |

Conflicts aka. Mutual Exclusion

At the heart of logic is the mutual exclusion. Indeed, the only necessary information about a logical problem is the structure of mutual exclusions. There is no need to know the fancy names of variables, nor do you need to know which of them carry a negation and which don't. The symbols are just not useful for conveying structural information.

As I found out just recently, conflict is used in the context of CDCL (conflict driven clause learning) as the short term for an assignment that makes a CNF formula false:

Given an assignment A , a clause is said to be in conflict when all of its literals are false in Af [1].

What is it, with all the narrow absolute terms? I always wondered, how Microsoft came up with the method of implying the generally best solution by using generic terms "windows", "word", etc.So I will have to find another word again. I will probably go back to mutual exclusion, when I find the time and feel like it. For right now, I just declare:

If one thing excludes another and the other thing excludes the one, that is called a conflict.

[1] K. L. McMillan, Applying SAT methods in Unbounded Symbolic Model Checking, http://kenmcmil.com/pubs/CAV02.pdf, p. 4

That said, a general conflict can be described in terms of set membership. Two elements a and b conflict with each other, if they cannot be members of the same set M. They are said to be mutually exclusive.

Consider the propositions A and B about elements a and b of a set M:

A = a is in set M B = b is in set M

further consider the propositions Y and N:

Y = a and b are in the same set M N = a and b are not in the same set M

therefore:

Y ⇔ (A ∧ B) = T N ⇔ (A ∧ B) = F

The truth table for propositions Y and N is:

| A | B | : | Y | N |

|---|---|---|---|---|

| 0 | 0 | 0 | 1 | |

| 0 | 1 | 0 | 1 | |

| 1 | 0 | 0 | 1 | |

| 1 | 1 | 1 | 0 |

Examining the actual state of affairs, allows to assert the truth of propositions Y and N:

(A ∧ B) = F ⇔ Y = F, N = T ⇔ a and b are not in the same set M (A ∧ B) = T ⇔ Y = T, N = F ⇔ a and b are in the same set M

Although Y and N are complementary, which implies some sort of symmetry, a conflict is actually asymmetric, since the weight of each alternative is obviously different.

Now consider proposition X about mutual exclusion of elements a and b:

X = a and b cannot be in the same set M X ⇒ (A ∧ B) = F ¬X ⇒ T

This makes mutual exclusion a hairy beast, since it is only equivalent to N, when there is in fact a mutual exclusion condition. The absence of a mutual exclusion condition does not allow to draw any conclusion about set membership at all.

The truth table of N indicates that it is equivalent to the NAND operation. If a conflict only was that simple, it would be really great. However, the fact that two elements are not in the same set, does not imply that they cannot be.

Assume that a and b are mutually exclusive. Further assume that a and b are either very insubordinate or have just not heard about their mututal exclusion status. So assume, that they both meet in set M for a date. Clearly that cannot be the case. It is an act of pure evil punishable by death (proposition D), which ends their life (proposition L):

D = X ∧ A ∧ B L = ¬(X ∧ A ∧ B)

Logic really does not care about such matters, it stoically maps the situation to a truth table:

| X | A | B | : | Y | N | D | L |

|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 1 | 0 | 1 | |

| 0 | 0 | 1 | 0 | 1 | 0 | 1 | |

| 0 | 1 | 0 | 0 | 1 | 0 | 1 | |

| 0 | 1 | 1 | 1 | 0 | 0 | 1 | |

| 1 | 0 | 0 | 0 | 1 | 0 | 1 | |

| 1 | 0 | 1 | 0 | 1 | 0 | 1 | |

| 1 | 1 | 0 | 0 | 1 | 0 | 1 | |

| 1 | 1 | 1 | 1 | 0 | 1 | 0 |

This is the minimal, i.e. sufficient and necesary, definition of a conflict, when only known facts are considered. The asymmetry is now maximal. And all hope for a nice calculus or algebra goes down the drain. (Therefore the Law requires that selections be ignored in propositional calculus and that there should be no mention of the fact that graph theory has been invented to handle The Ugliness).

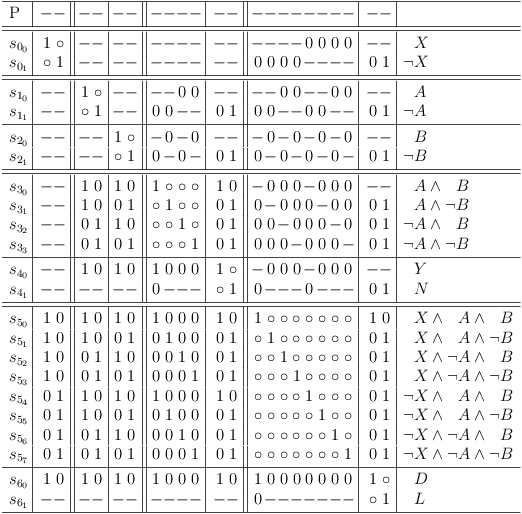

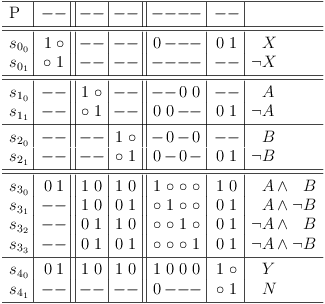

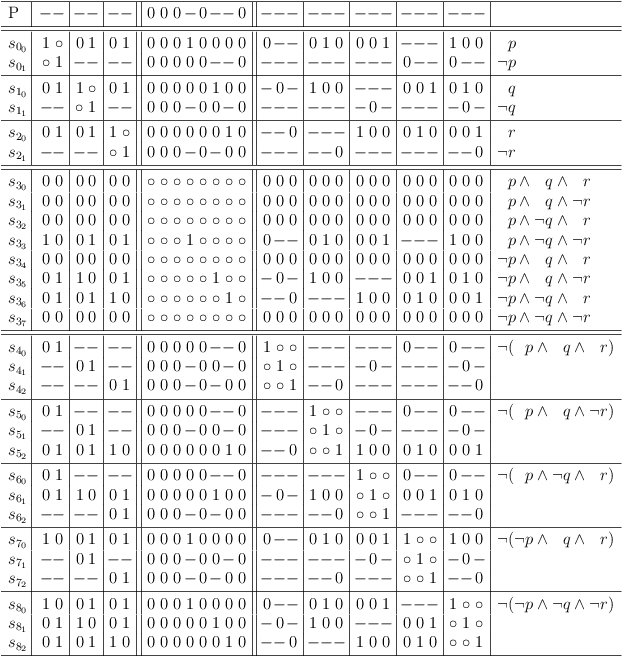

The propositions X, A, B, Y, N, D, L can be mapped to a satoku matrix:

Mapped conflict propositions

- X requires N (s004 = (0, 1) ),

- but ¬X does not require Y (s014 = (1, 1) ),

- whereas Y does indeed require ¬X (s400 = (0, 1) )

- and N does not require X (s410 = (1, 1) )

(X → N ⇔ ¬X∨N ⇔ N∨¬X ⇔ ¬N → ¬X ):

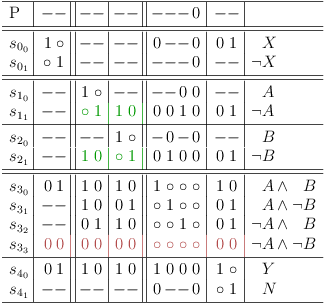

Immediate death penalty

Indicating a mutual exclusion condition no longer allows that A and B both become true:

Indicate mutual exclusion

Whereas independent set membership ¬X does not have any consequences for the possible combinations of A and B:

Independent set membership ¬X

As usual, the satoku matrix does not need the full truth table to work. Specifing the conflicts accurately is sufficient:

Specifing conflicts accurately is sufficient

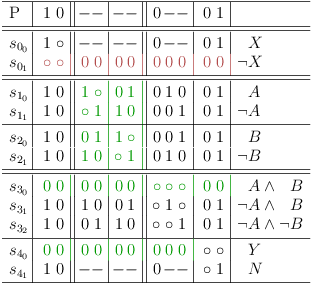

The next step enforces the proposition ¬(¬A ∧ ¬B), which requires at least one of A or B to become true:

At least one of A or B must be true

If A and B are mutually exclusive, A becomes equivalent to ¬B and ¬A becomes equivalent to B:

A is equivalent to ¬B, if mutually exclusive

And that is exactly, how a logical variable works:

- p and ¬p are mutually exclusive

- eventually one of p or ¬p must be selected

It is therefore a symmetric special case of a general conflict. Whether A is substitued by p and B by ¬p or vice verca is entirely arbitrary.

Basic Truth

Before discarding this concept as outright non-sensical or giving in to the tempation to forbid it because it is self-referential, please, consider that bivalent logic itself is a highly self-referential concept.

IMHO, the whole ordeal caused by the confusion of existentiality with the attribution of properties could be avoided, if the books about the calculus of propositional logic did not try to celebrate some mystical holy (axiomatic) process of creation, making truth values appear from meaningless propositional variables in meaningless formulas.

Instead of starting with the 1st level truth tables, you could just as well begin with a 0th level truth table about mutually exclusive values:

Variable : Values p 0 1 This table could be easily expanded to any arbitrary set of mutually exclusive values. The rest of multi-valued logics then develops just fine and without any mystical properties by simply extending the set of values. (I am aware, that ternary logic immediately collapses into a mystical process of determining appropriate NEG, AND, OR functions for a nice algebra).

Given the "Laws of Logic":

- Law of excluded middle (LEM): "either A or ~A"

- Law of identity (LI): "A is A, and A is not ~A"

- Law of non-contradiction (LNC): "not (both A and ~A)"

The Law of excluded middle (LEM) is rephrased:

- Law of excluded middle (LEM): "not (neither A nor ~A)"

The Law of identity (LI): is broken up in two parts:

- LI1: "A and (not ~A)"

- LI2: "(not A) and ~A"

p=T and p=F are statements about the truth value of a variable.

Assuming:

- A ⇔ p=T maps to 1

- not A ⇔ p=T maps to 0

- ~A ⇔ p=F maps to 1

- not ~A ⇔ p=F maps to 0

let's break the laws and map them into the following truth table :

| p=T | p=F | : | Logic | Interpretation |

|---|---|---|---|---|

| 0 | 0 | 0 | A variable cannot be something else (LEM) | |

| 0 | 1 | 1 | A variable is not True and it is False (LI2) | |

| 1 | 0 | 1 | A variable is True and it is not False (LI1) | |

| 1 | 1 | 0 | A variable cannot be both True and False (LNC) |

This is one way to have logic explain itself.

As far as I am concerned, that is exactly what happens anyway, no matter how much it is hidden behind laws and axioms. It is really not possible to understand laws and axioms without logic in the first place. Being asked to pretend that one cannot understand, necessarily requires that one can understand in the first place. If that is not self-referential, I don't know what is.

When I'm asked to pretend to not understand something, it is usually pretty easy for me, since I actually don't understand and therefore need not pretend. The hard part comes, when I am then expected to understand - normally after some lengthy explanation. Usually, I still don't understand. When I finally understand by going over the explanation again and again, exploring its consequences and so on, I find that the explanation would have been really, really helpful, if I had actually understood it in the first place.

This is why I present the satoku matrix here, as if it was already understood. Because that's the way it is. There is nothing in its construction or operations that is not already explained in arbitrary length elsewhere. So there should really be no need to explain anything at all. But I guess it is in fact hard to understand a poem with a dictionary alone.

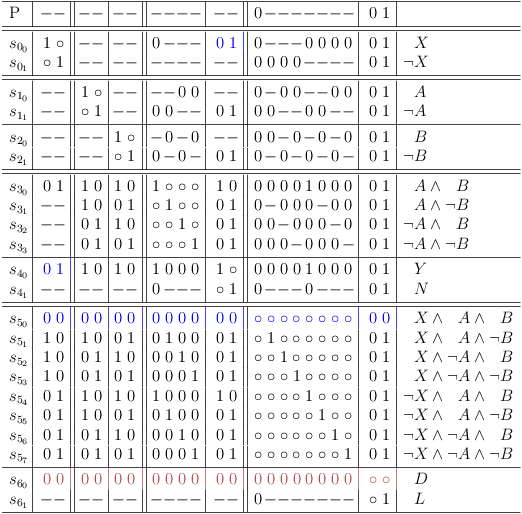

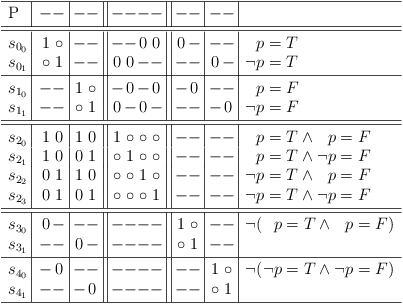

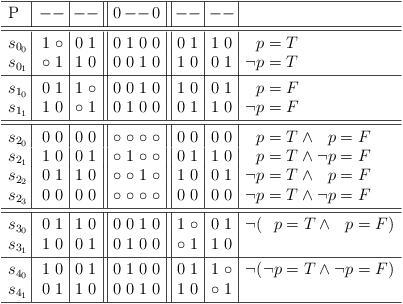

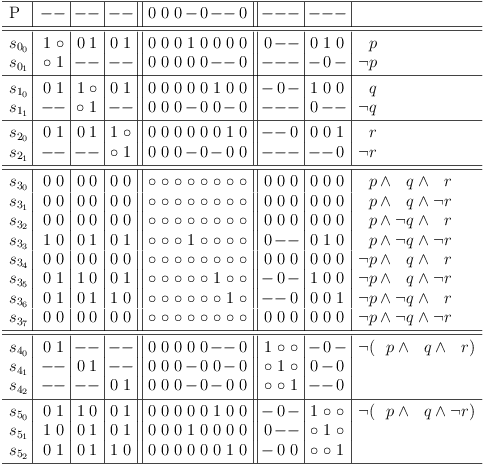

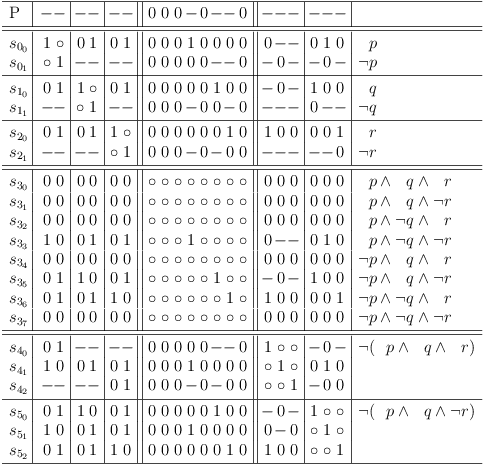

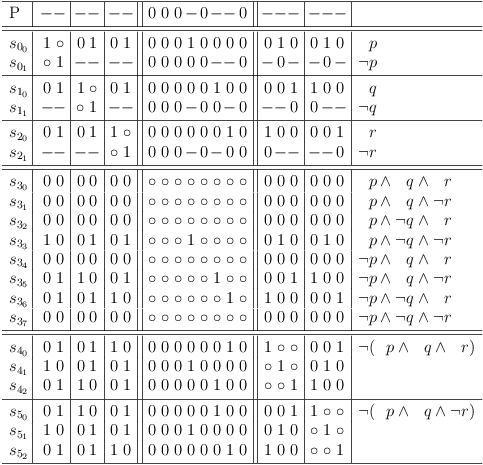

Accordingly, the plain mapping of Logic to a satoku matrix is therefore:

Satoku matrix for Logic

Following up on the requirements, the satoku matrix presents as:

Satoku matrix for Logic, requirements updated

After redundancies are removed, Logic reveals itself as:

Satoku matrix for Logic, redundancies removed

Which reads:

Logic is a little bit like Schroedinger's cat, you must determine whether p = T or whether that is not the case (p = F ), but as long as you do not look, anything is possible.

There is literature abound regarding LEM and whether or not it should hold. Personally, I never had a problem with LEM -- nor with the material implication for that matter (drawing conclusions from false statements can be a fountain of hilarious fun).

At a certain stage in my research I was pondering the idea of a ternary logic, since mutual exclusion and partial distributive expansion seemed to possibly hint at such a thing. However, I found that the use of two states (hard one and soft one) in the satoku matrix is just a convenient (but not even remotely necessary) way to track the requirement update state. It does not indicate the use of an entirely different truth value. The experiment in ternary logic therefore lead exactly nowhere and so I still have no reason to doubt LEM.

However, I do have a problem with LNC, specifically with the decision OCD resulting in the need to decide something right now, because it must be decided eventually. Doing so means driving full speed on the highway to future contingents.

I made a funny discovery when trying to explain the difference between a selection problem and a decision problem. When I say, that a selection from a clause is eventually made, some people insist that "the variable becomes true, and it is therefore the same thing". It seems suspicious that I agree to the variable becoming true in that case, but that I still insist on there being a difference between making a selection from a clause and making a variable true (equivalent to making a selection from a clause representing a variable). I must admit, that it sometimes is a little bit annoying that everything I say is simply dismissed as irrelevant.

I might be breaking bad, when I lift the "Law of Non-Contradiction" a little, but it is necessary to explain why I do not find too much value in the concept of atomic variables being different from the rest of the bunch.

OK, kids! Don't try this at home! It is really, really illegal and you can get into a lot trouble!

Since there is nothing unlawful in substituting logical terms for variables, it is probably less evil to make it happen in this manner:

Logic = ((p=T) ∨ (p=F)) ∧ ¬((p=T) ∧ (p=F)) (p=T) = q (p=F) = r ⇒ Logic = (q ∨ r) ∧ ¬(q ∧ r)

The term ¬(q∧r) is the "Law of Non-Contradiction", which is ignored for right now.

Assuming the following interpretation principles:

1 = No known facts contradict this possibility 0 = The known facts eliminate this possibility

the truth table for q∨r is:

| q | r | : | q ∨ r | Interpretation |

|---|---|---|---|---|

| 0 | 0 | 0 | The known facts do not support the statement | |

| 0 | 1 | 1 | The known facts eliminate the possibility of q | |

| 1 | 0 | 1 | The known facts eliminate the possibility of r | |

| 1 | 1 | 1 | No adverse facts are known, q is possible and r is possible |

... next stop is Vietnam:

q = (p=T) r = (p=F)

And it's 5, 6, 7, open up the pearly gates ...:

| p=T | p=F | : | Logic | Interpretation |

|---|---|---|---|---|

| 0 | 0 | 0 | The known facts do not support the statement | |

| 0 | 1 | 1 | The known facts eliminate the possibility of p=T | |

| 1 | 0 | 1 | The known facts eliminate the possibility of p=F | |

| 1 | 1 | 1 | No adverse facts are known, p=T is possible and p=F is possible |

Be the first one on your block, to have your boy come home in a box:

| p=T | p=F | : | Logic | Interpretation |

|---|---|---|---|---|

| 0 | 0 | 0 | A variable cannot be something else (LEM) | |

| 0 | 1 | 1 | A variable is not True and it is False (LI2) | |

| 1 | 0 | 1 | A variable is True and it is not False (LI1) | |

| 1 | 1 | 1 | A variable can be undecided (at least for a while), it may not even be necessary to decide it at all just make sure to eventually apply ¬(p=F ∧ p=T) to make it fit |

This is the logic of known facts. That LNC must hold does not mean that it actually has to be applied immediately. Rather, if the known facts do not eliminate a possibility, applying LNC for no good reason anyway is quite arbitrary and leads to the problem of future contingents.

In other words: It may be a known fact, that eventually LNC ¬(p = F∧p = T) must be satisfied, however, this fact does neither eliminate the possibility of p = T nor does it eliminate the possibility of p = F . Without further conditions, it is a pretty useless fact.

Half-Truth Tables

A truth table lists all possible combinations of truth values and therefore acknowledges the fact that stuff can be undecided. But it does so in the most implicit manner possible. Truth values are always explicit which emphasizes that a variable must be actually decided and hides the importance of undetermined truth values. A truth table is basically a list of possibilities and the result, when everything is said and done.

So it does show the truth, but really nothing but the full truth. I.e., it does not show anything else. And often enough, truth tables are quite oversharing.

So here is a half-truth table, which shows only as much relevant information as desired without sacrificing extensibility.

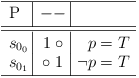

Variable Representation

Examining the details of a clause sub-matrix shows, that it consists of rows representing selections:

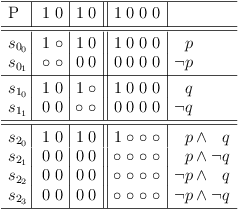

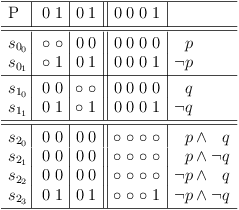

Unrestricted selection of truth values

This is sufficient to represent the "Law of Identity" p∨¬p .

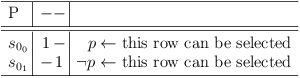

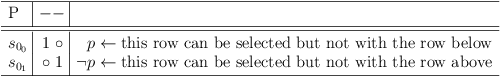

Conflicts are marked by setting the matrix cell value at the intersection of the conflicting selections to 0:

Restricted selection of truth values

This is necessary to represent the "Law of Non-Contradiction" ¬(p∧¬p) . Observe, that this condition does not have any immediate effect. It only causes effects, if a selection is actually made.

If a selection row becomes unselectable, the selection represented by the other row is enforced. This satisfies the condition of accurately representing known facts.

If both rows cannot be selected, the problem is unsatisfiable. This represents the "Law of Excluded Middle".

That's it ... Logic in a nutshell.

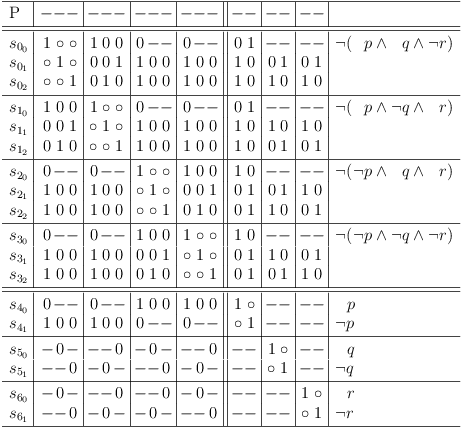

2-Variable Function Tables (Binary Functions)

Tautology (all combinations are allowed):

Disallowing all 4 combinations of a tautology results in a contradiction:

Exactly 1 Combination Impossible

This defines the well-known functions OR, IF .. THEN, NOR.

Disjunction, OR:

Implication, IF ... THEN:

As you can see, an implication is just a variation of a disjunction.

Alternative denial, NAND, Sheffer stroke:

There is 1 other variation of this type: (p∨¬q) , which is equivalent to the implication q → p .

Exactly 2 Combinations Impossible

Antivalence, XOR:

Equivalence, IFF ... THEN:

Assert p (q is independent):

There are 3 other variations which force a variable to obtain a single truth value: (¬p), (q), (¬q) .

Exactly 3 Combinations Impossible

Conjunction, AND:

Joint denial, NOR, Peirce's arrow:

There are 2 other variations which force 2 variables to obtain a single truth value combination: (p∧¬q), (¬p∧q) .

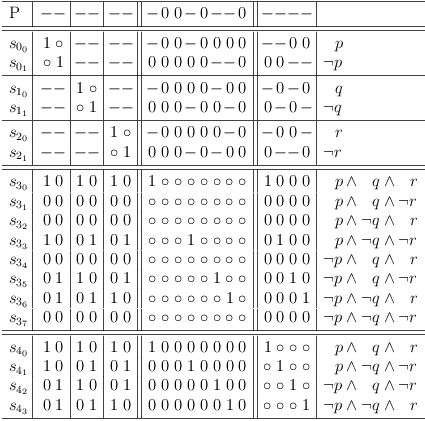

3-Variable Function Tables (Ternary Functions)

Contrary to popular belief, 3-variable functions are not just boringly combined 2-variable functions.

Especially noteworthy is the difference between a 3-variable XOR, 3 variable single selection and a 3-variable single selection which allows the "or none of the above" case.

The 3-variable XOR (p⊕q⊕r ) can be nicely constructed by performing a 2 variable XOR on the result of a 2 variable XOR for 2 variables p, q and the third variable r:

XOR(p, q, r) = XOR(XOR(p, q), r) = ((p ⊕ q) ⊕ r)

Although the 3-variable single selection X1(p, q, r) only differs in one additional constraint and therefore one less solution from the XOR, the concatenation is invalid:

X1(p, q, r) != X1(X1(p, q), r)

since for:

p=1, q=1, r=1

it follows, that:

X1(X1(1, 1), 1) = X1(0, 1) = 1

which is false.

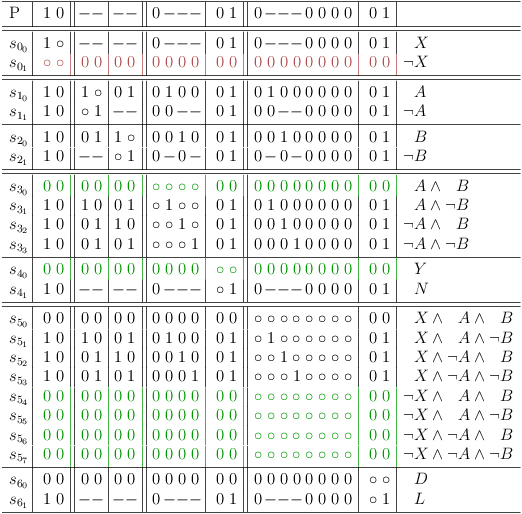

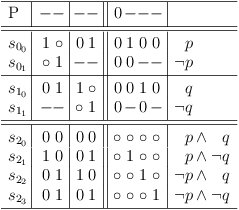

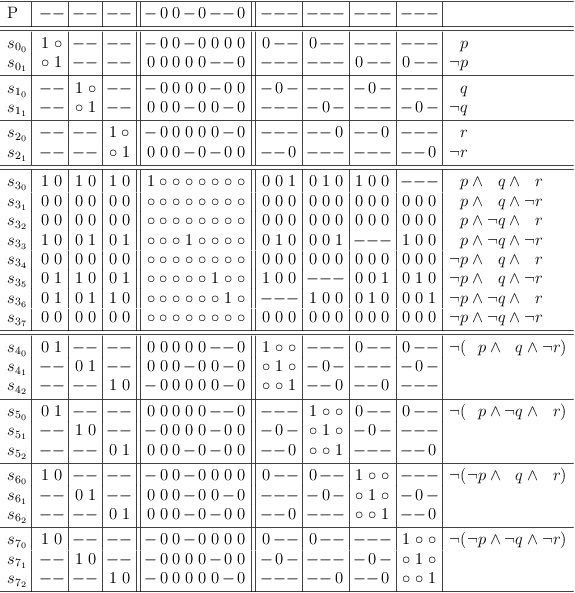

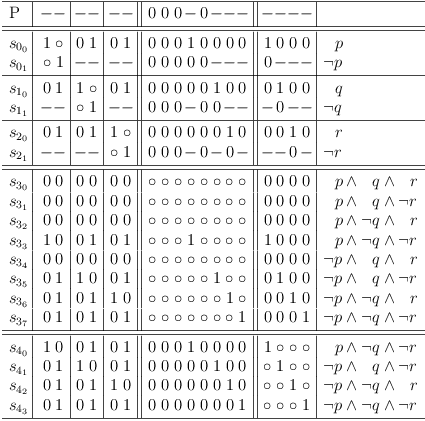

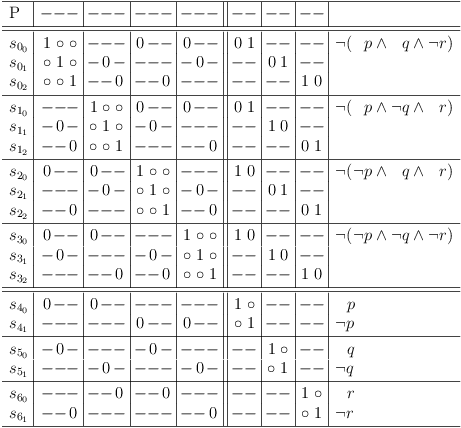

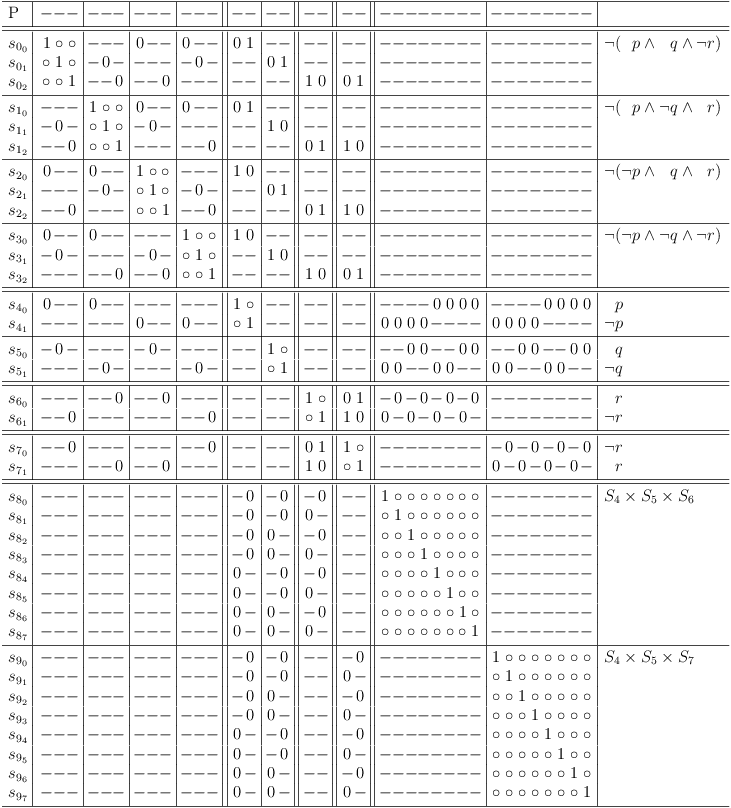

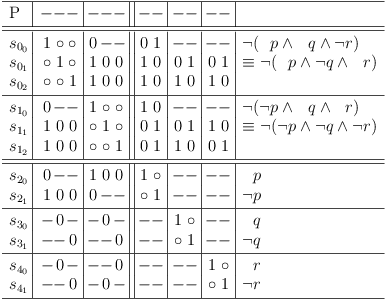

XOR 3 Vars

XOR 3 Vars

XOR 3 Vars from CNF:

XOR 3 Vars from CNF

XOR 3 Vars from CNF, RUA:

XOR 3 Vars from CNF, RUA

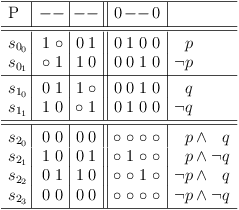

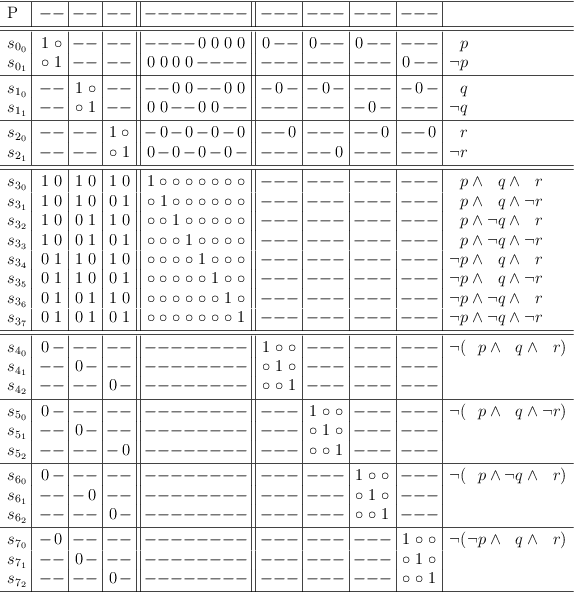

Single Selection 3 Vars

While 2-variable XOR and 2-variable single selection are identical, this is not the case for the k-variable versions with k >= 3.

Single Selection 3 Vars

Single Selection 3 Vars from CNF:

Single Selection 3 Vars from CNF

Single Selection 3 Vars from CNF, RUA:

Single Selection 3 Vars from CNF, RUA

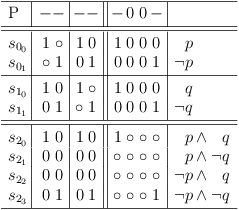

Single Selection or None 3 Vars

Single Selection or None 3 Vars

Single Selection or None 3 Vars from CNF:

Single Selection or None 3 Vars from CNF

Single Selection or None 3 Vars from CNF, RUA:

Single Selection or None 3 Vars from CNF, RUA

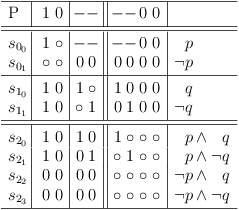

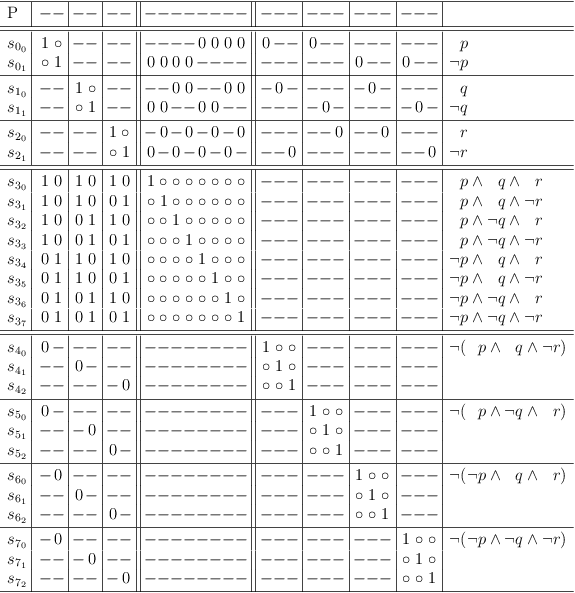

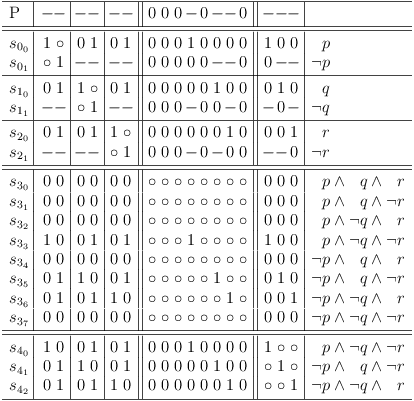

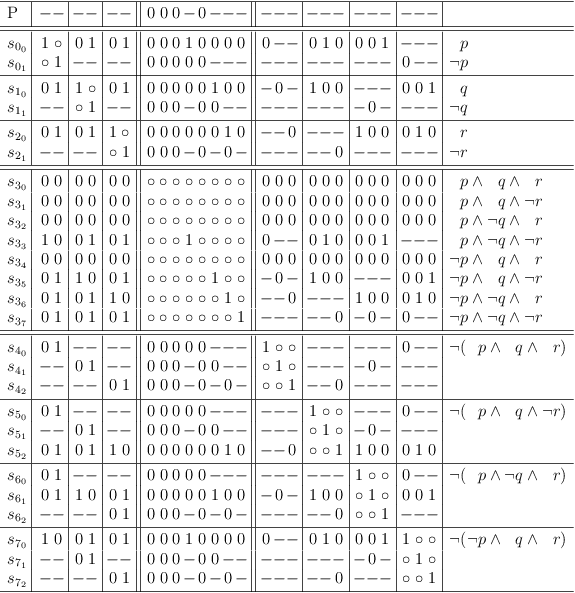

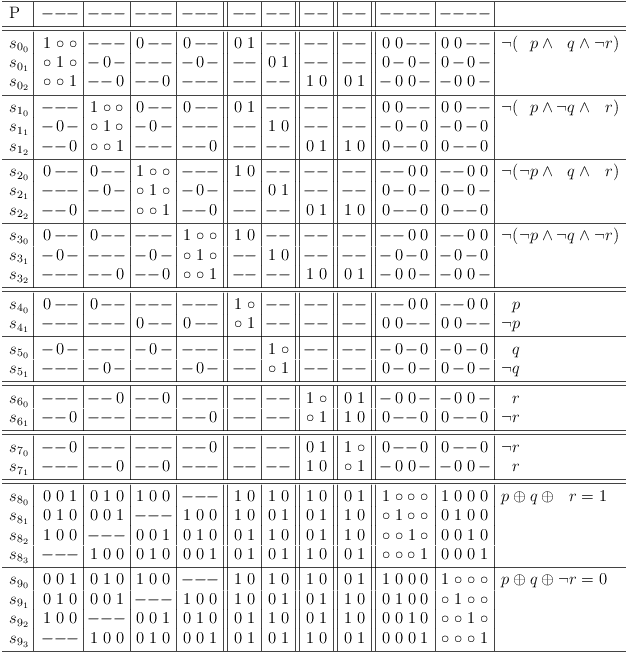

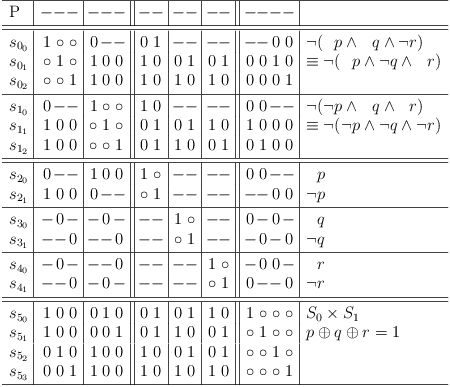

Superset/Identity Detection

The result from Single Selection 3 Vars is used to show how superset/identity detection works.

Single Selection 3 Vars from CNF, RUA, identity detection (standard):

Standard Superset/Identity Detection

Single Selection 3 Vars from CNF, RUA, identity detection. Use the longest possible superset relation. If there are more than 1, arbitrarily select one:

Superset/Identity Detection, Step 1

Single Selection 3 Vars from CNF, RUA, identity detection. Use the longest possible superset relation:

Superset/Identity Detection, Step 2

Single Selection 3 Vars from CNF, RUA, identity detection. Use the longest possible superset relation:

Superset/Identity Detection, Step 3

In case you did not get the joke: I really consider the "full truth table" to be the half-truth table.

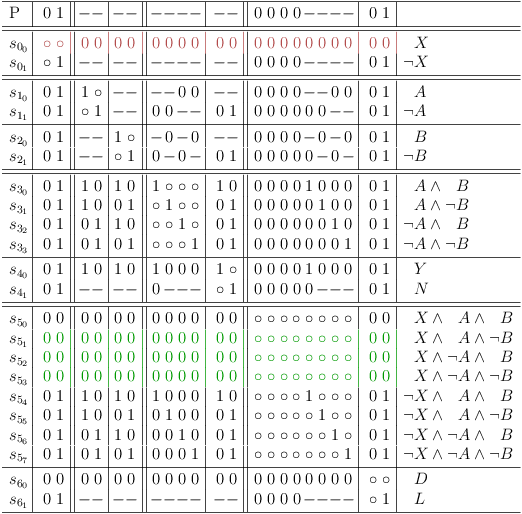

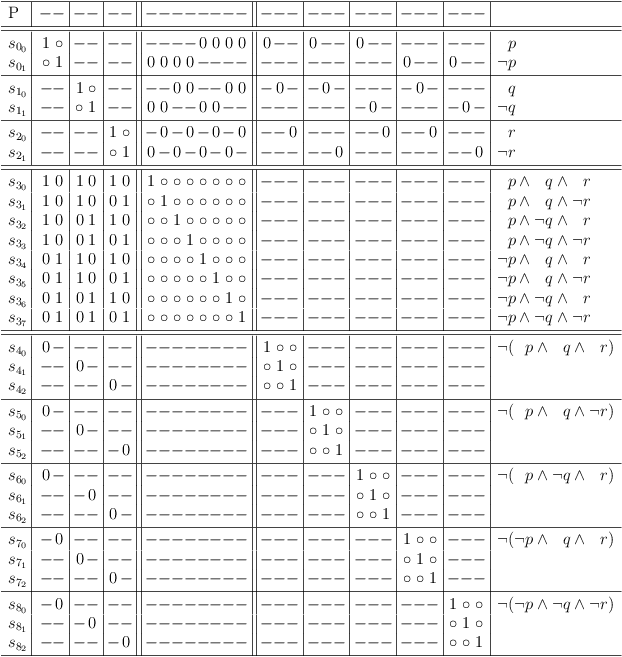

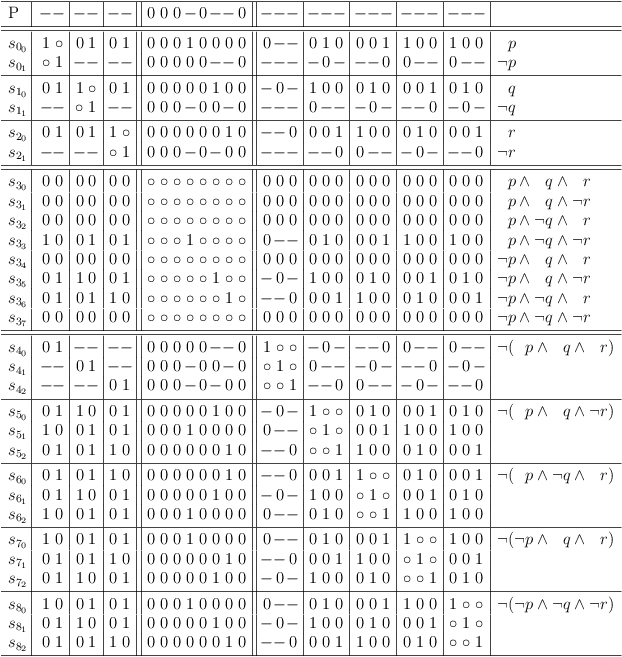

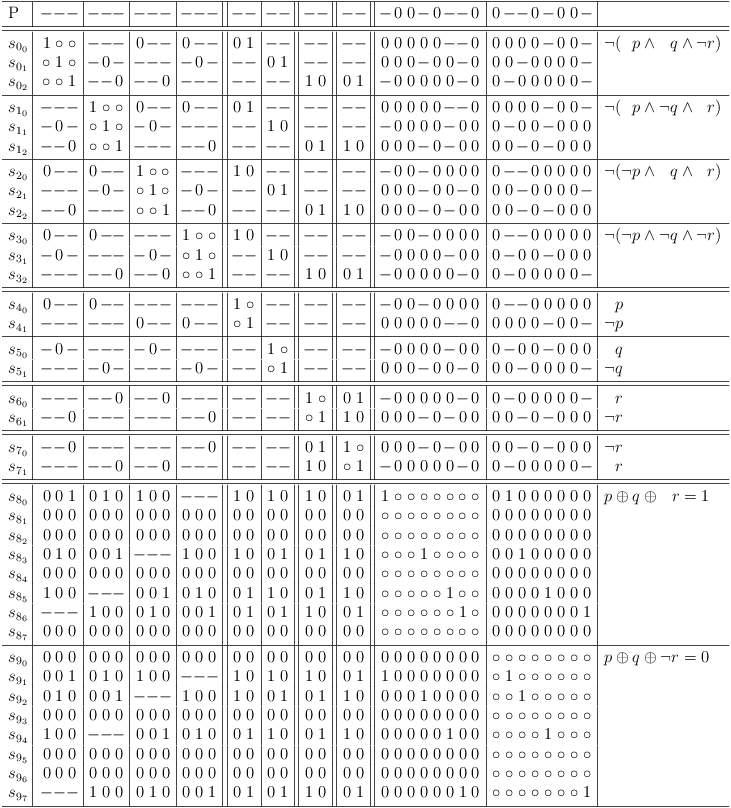

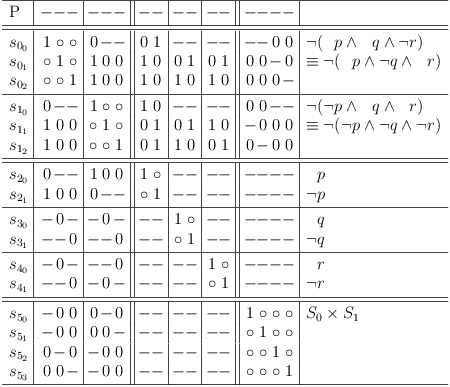

XOR

XOR 3 Vars, CNF

XOR 3 Vars, CNF

XOR 3 Vars, CNF, add negated view of variable r , prepare combinations for p × q × r and p × q × ¬r

XOR 3 Vars, CNF negated variable r , prepare combinations for p × q × r , p × q × ¬r

XOR 3 Vars, CNF, variable combinations, RUA

XOR 3 Vars, CNF, variable combinations, RUA

XOR 3 Vars, CNF, variable combinations, RUA, remove killed rows

XOR 3 Vars, CNF, variable combinations, RUA, remove killed rows

XOR 3 Vars, CNF with conflict bias

XOR 3 Vars, CNF with conflict bias

XOR 3 Vars, CNF with conflict bias, redundancy removal

XOR 3 Vars, CNF with conflict bias, redundancy removal

XOR 3 Vars, CNF with conflict bias, redundancy removal, prepare S0 × S1

XOR 3 Vars, CNF with conflict bias, redundancy removal, prepare S0 × S1

XOR 3 Vars, CNF with conflict bias, S0 × S1 , RUA

XOR 3 Vars, CNF with conflict bias, S0 × S1 , RUA

Philosophy

Contradictions

The usual handling of contradictions is to throw away the "useless" information. Well, if that is the case, contradictions are in fact useless.

But what, if the information is preserved and not thrown away?

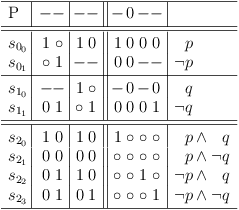

Let's have a look at a contradiction:

// | || S|| 0| 0|| 0 0 | 0 0 || 0 0 0 0 ||

// +----++----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 1|| | || 1 * | 0 0 || 0 0 0 0 || \hphantom{\neg} p

// | | 2|| | || * 1 | 0 0 || 0 0 0 0 || \neg p

// +-----+----++---+----++-----+-----++---------++

// | | 3|| | || 0 0 | 1 * || 0 0 0 0 || \hphantom{\neg} q

// | | 4|| | || 0 0 | * 1 || 0 0 0 0 || \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 5|| | || 0 0 | 0 0 || 1 * * * || \hphantom{\neg} p \wedge \hphantom{\neg} q

// | | 6|| | || 0 0 | 0 0 || * 1 * * || \hphantom{\neg} p \wedge \neg q

// | | 7|| | || 0 0 | 0 0 || * * 1 * || \neg p \wedge \hphantom{\neg} q

// | | 8|| | || 0 0 | 0 0 || * * * 1 || \neg p \wedge \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// |:info:| move point inside and press `C-u M-x satoku-mode RET'

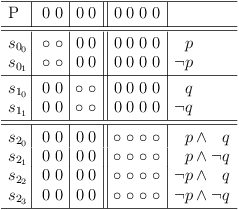

Sure, it does not seem very useful, but if the meta information (comments at the side) is available, it can be restored. First clear all conflicts (except LNC conflicts):

// | || S|| 0| 0|| 0 0 | 0 0 || 0 0 0 0 ||

// +----++----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 1|| | || 1 * | _ _ || _ _ _ _ || \hphantom{\neg} p

// | | 2|| | || * 1 | _ _ || _ _ _ _ || \neg p

// +-----+----++---+----++-----+-----++---------++

// | | 3|| | || _ _ | 1 * || _ _ _ _ || \hphantom{\neg} q

// | | 4|| | || _ _ | * 1 || _ _ _ _ || \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 5|| | || _ _ | _ _ || 1 * * * || \hphantom{\neg} p \wedge \hphantom{\neg} q

// | | 6|| | || _ _ | _ _ || * 1 * * || \hphantom{\neg} p \wedge \neg q

// | | 7|| | || _ _ | _ _ || * * 1 * || \neg p \wedge \hphantom{\neg} q

// | | 8|| | || _ _ | _ _ || * * * 1 || \neg p \wedge \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// |:info:| move point inside and press `C-u M-x satoku-mode RET'

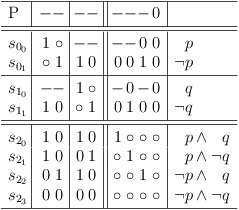

Then recreate the conditions from the meta information:

// | || S|| 0| 0|| 0 0 | 0 0 || 0 0 0 0 ||

// +----++----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 1|| | || 1 * | _ _ || _ _ 0 0 || \hphantom{\neg} p

// | | 2|| | || * 1 | _ _ || 0 0 _ _ || \neg p

// +-----+----++---+----++-----+-----++---------++

// | | 3|| | || _ _ | 1 * || _ 0 _ 0 || \hphantom{\neg} q

// | | 4|| | || _ _ | * 1 || 0 _ 0 _ || \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 5|| | || _ 0 | _ 0 || 1 * * * || \hphantom{\neg} p \wedge \hphantom{\neg} q

// | | 6|| | || _ 0 | 0 _ || * 1 * * || \hphantom{\neg} p \wedge \neg q

// | | 7|| | || 0 _ | _ 0 || * * 1 * || \neg p \wedge \hphantom{\neg} q

// | | 8|| | || 0 _ | 0 _ || * * * 1 || \neg p \wedge \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// |:info:| move point inside and press `C-u M-x satoku-mode RET'

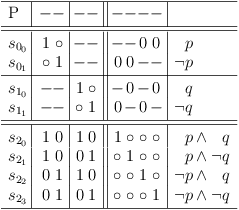

And update requirements:

// | || S|| 0| 0|| 0 0 | 0 0 || 0 0 0 0 ||

// +----++----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 1|| | || 1 * | _ _ || _ _ 0 0 || \hphantom{\neg} p

// | | 2|| | || * 1 | _ _ || 0 0 _ _ || \neg p

// +-----+----++---+----++-----+-----++---------++

// | | 3|| | || _ _ | 1 * || _ 0 _ 0 || \hphantom{\neg} q

// | | 4|| | || _ _ | * 1 || 0 _ 0 _ || \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// | | 5|| | || 1 0 | 1 0 || 1 * * * || \hphantom{\neg} p \wedge \hphantom{\neg} q

// | | 6|| | || 1 0 | 0 1 || * 1 * * || \hphantom{\neg} p \wedge \neg q

// | | 7|| | || 0 1 | 1 0 || * * 1 * || \neg p \wedge \hphantom{\neg} q

// | | 8|| | || 0 1 | 0 1 || * * * 1 || \neg p \wedge \neg q

// +-----+----++---+----++-----+-----++---------++

// +-----+----++---+----++-----+-----++---------++

// |:info:| move point inside and press `C-u M-x satoku-mode RET'

And voila! the information is resurrected.

Here is a better example, where a contradiction is caught in the act:

\|:todo:|

Conclusions

Conspiracy theory:

Aristotle came up with a devious plan (logic) to hide the true nature of an asymmetric conflict by abstracting it into the simple atomic duality of True and False.

He watched with evil pride, how his plan fruitioned ...

But plagued by a guilty conscience, he revealed shortly before his death that logic does not apply to future events.

However, the damage was done and logic was already unleashed upon the world. His students really liked the symmetries of mathematics and went on to ignore the true asymmetry of conflicts.

Funny perspective:

Aristotle came up with a funny joke, by postulating that all there was to know about a conflict was whether it is True or False. When he realized, that nobody got the point, he explained the joke by saying that logic does not apply to future events. However, people still did not get the joke. That's what happens, when you have a weird sense of humor.

Philosophical view:

Aristotle was a Zen-Buddhist who constructed logic based on the minimal contradiction as a typical Zen lesson to illustrate the fallacy of future contingents.

Copyright

Copyright (C) 2013, Wolfgang Scherer, <Wolfgang.Scherer@gmx.de>. See the document source for conditions of use under the GNU Free Documentation License.